Predict Human Actions

Details

This project was project #3 for Dr. Zhang’s Human Centered Robotics (CSCI473) class at the Colorado School of Mines during the Spring 2020 semester. It was designed to provide an introduction to machine learning in robotics through the use of Support Vector Machines (SVMs). The original project deliverables and description can be viewed here.

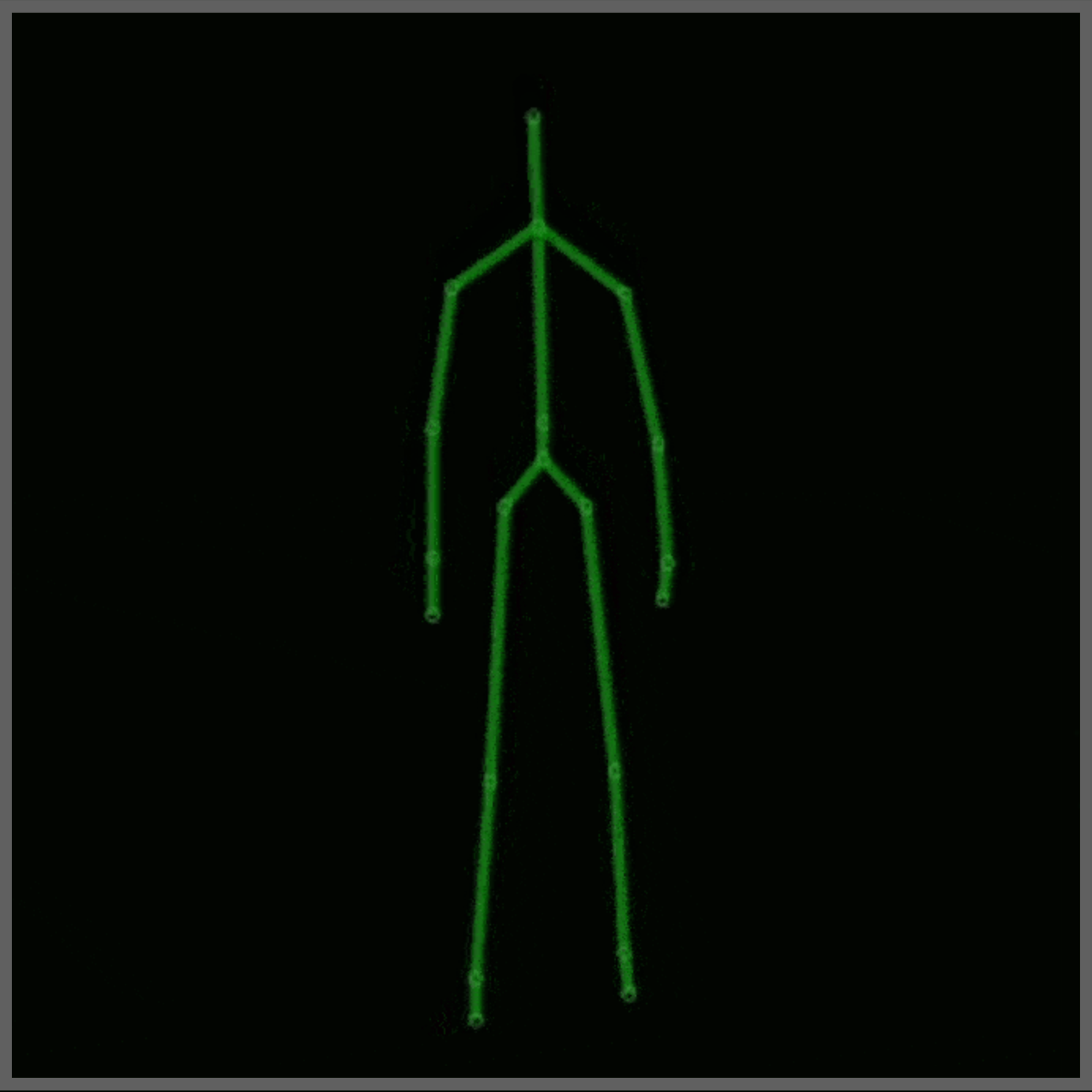

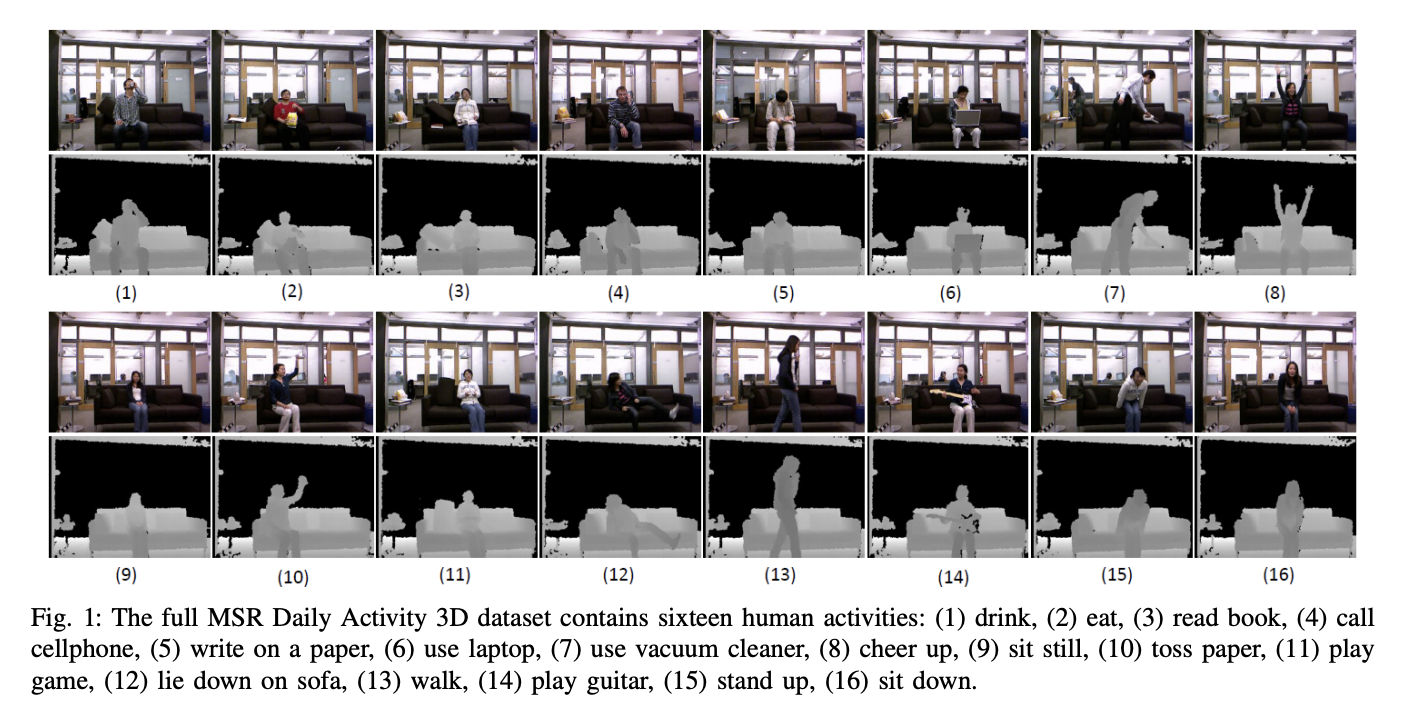

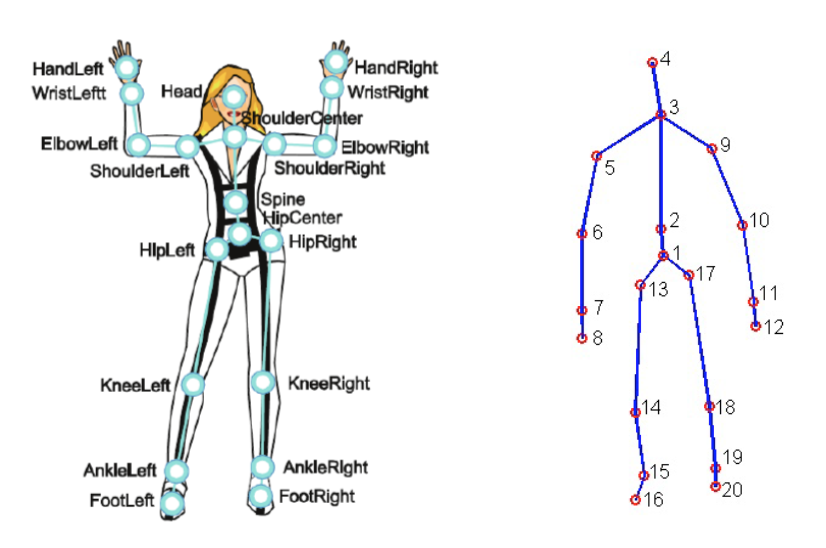

For this project, a modified version of the MSR Daily Activity 3D Dataset (Figure 2) was used. This dataset contains 16 human activities captured with an Xbox Kinect sensor and stored as skeletons. A skeleton is an array of the real-world (x, y, z) coordinates of 20 human joints recorded in a single frame. Here is a figure that shows what a skeleton is:
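
As a concrete illustration of the skeleton structure, the sketch below groups per-joint rows into frames of 20 (x, y, z) tuples. The row layout `frame joint x y z` and the helper name `parse_skeleton_lines` are assumptions for illustration; the actual layout of the modified dataset files is not described here.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Joint = Tuple[float, float, float]

NUM_JOINTS = 20  # the Kinect skeleton tracks 20 joints per frame


def parse_skeleton_lines(lines: List[str]) -> List[List[Joint]]:
    """Group 'frame joint x y z' rows into a list of frames.

    Hypothetical format: one joint per line, with whitespace-separated
    frame index, joint index, and x, y, z coordinates.
    """
    frames: Dict[int, Dict[int, Joint]] = defaultdict(dict)
    for line in lines:
        parts = line.split()
        if len(parts) != 5:
            continue  # skip blank or malformed rows
        frame, joint = int(float(parts[0])), int(float(parts[1]))
        x, y, z = (float(v) for v in parts[2:])
        frames[frame][joint] = (x, y, z)

    skeletons: List[List[Joint]] = []
    for frame in sorted(frames):
        joints = frames[frame]
        # keep only frames in which all 20 joints were recorded
        if len(joints) == NUM_JOINTS:
            skeletons.append([joints[j] for j in sorted(joints)])
    return skeletons
```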

To predict human actions, the raw data must first be converted into a form that an SVM can process. For this project, the following representations were used:

- Relative Angles and Distances (RAD) representation

- Histogram of Joint Position Differences (HJPD) representation
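
The two representations can be sketched as follows. This is a minimal, hypothetical version of the usual formulation, not the project's actual code: RAD uses histograms of the distances from a center joint (assumed to be the hip center) to five extremities (head, hands, feet) plus the angles between adjacent extremity vectors, and HJPD uses histograms of each joint's x, y, z offset from the same reference joint. The joint indices assume the Kinect SDK v1 ordering, and the bin counts and ranges (`bins`, `max_dist`, `max_offset`) are illustrative choices.

```python
import math
from typing import List, Sequence, Tuple

Joint = Tuple[float, float, float]

# Hypothetical 0-based joint indices, assuming the Kinect SDK v1 ordering.
HIP_CENTER, HEAD = 0, 3
HAND_LEFT, HAND_RIGHT, FOOT_LEFT, FOOT_RIGHT = 7, 11, 15, 19
# Star-skeleton extremities, listed in order around the body.
EXTREMITIES = [HEAD, HAND_LEFT, FOOT_LEFT, FOOT_RIGHT, HAND_RIGHT]


def sub(a: Joint, b: Joint) -> Joint:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def norm(v: Joint) -> float:
    return math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)


def angle(u: Joint, v: Joint) -> float:
    """Angle in radians between two vectors (0 if either has zero length)."""
    nu, nv = norm(u), norm(v)
    if nu == 0 or nv == 0:
        return 0.0
    cos = (u[0] * v[0] + u[1] * v[1] + u[2] * v[2]) / (nu * nv)
    return math.acos(max(-1.0, min(1.0, cos)))  # clamp for float error


def histogram(values: Sequence[float], bins: int, lo: float, hi: float) -> List[float]:
    """Normalized histogram; values outside [lo, hi] go to the edge bins."""
    counts = [0] * bins
    width = (hi - lo) / bins
    for v in values:
        i = int((v - lo) / width)
        counts[min(max(i, 0), bins - 1)] += 1
    total = len(values) or 1
    return [c / total for c in counts]


def rad_features(frames: List[List[Joint]], bins: int = 10, max_dist: float = 2.0) -> List[float]:
    """RAD sketch: histograms of center-to-extremity distances and of the
    angles between adjacent extremity vectors, taken over all frames."""
    n = len(EXTREMITIES)
    dists: List[List[float]] = [[] for _ in range(n)]
    angles: List[List[float]] = [[] for _ in range(n)]
    for joints in frames:
        vecs = [sub(joints[e], joints[HIP_CENTER]) for e in EXTREMITIES]
        for i in range(n):
            dists[i].append(norm(vecs[i]))
            angles[i].append(angle(vecs[i], vecs[(i + 1) % n]))
    feature: List[float] = []
    for d in dists:
        feature += histogram(d, bins, 0.0, max_dist)
    for a in angles:
        feature += histogram(a, bins, 0.0, math.pi)
    return feature


def hjpd_features(frames: List[List[Joint]], bins: int = 10, max_offset: float = 1.5) -> List[float]:
    """HJPD sketch: per-joint histograms of x, y, z offsets from the hip center."""
    feature: List[float] = []
    for j in range(len(frames[0])):
        if j == HIP_CENTER:
            continue  # the reference joint's offset is always zero
        for axis in range(3):
            diffs = [joints[j][axis] - joints[HIP_CENTER][axis] for joints in frames]
            feature += histogram(diffs, bins, -max_offset, max_offset)
    return feature
```

Because both functions return fixed-length vectors (100 values for RAD and 570 for HJPD with the default 10 bins), activity sequences with different numbers of frames map to features of the same size, which is what an SVM needs.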

For classification, each representation is fed into an SVM, powered by LIBSVM, to train a model that can predict human actions. Two models are created, one using RAD and one using HJPD. The goal is to make both models as accurate as possible and see which representation performs better.
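
LIBSVM's command-line tools read training data in a sparse text format, one sample per line: `<label> <index>:<value> ...` with 1-based, ascending indices. The sketch below (with hypothetical helper names) writes feature vectors in that format and builds an exponential grid of (C, gamma) values for tuning an RBF-kernel SVM; the grid ranges are an assumption, similar to those used by LIBSVM's `grid.py` tool.

```python
from typing import List, Sequence, Tuple


def to_libsvm_line(label: float, features: Sequence[float]) -> str:
    """Format one sample as 'label idx:val ...' (LIBSVM sparse format).

    Indices are 1-based and ascending; zero-valued features are omitted.
    """
    pairs = [f"{i}:{v:g}" for i, v in enumerate(features, start=1) if v != 0]
    return " ".join([f"{label:g}"] + pairs)


def write_libsvm_file(path: str, samples: List[Tuple[float, Sequence[float]]]) -> None:
    """Write (label, feature vector) pairs to a LIBSVM-format text file."""
    with open(path, "w") as f:
        for label, features in samples:
            f.write(to_libsvm_line(label, features) + "\n")


def grid(log2c=range(-5, 16, 2), log2g=range(3, -16, -2)) -> List[Tuple[float, float]]:
    """Exponentially spaced (C, gamma) pairs to try when tuning an RBF SVM."""
    return [(2.0 ** c, 2.0 ** g) for c in log2c for g in log2g]
```

Each grid pair could then be scored with cross-validation, for example `svm-train -c <C> -g <gamma> -v 5 train.txt`, and the best pair used to train the final model without `-v`.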

Knowing this, here is an overview of what the code does:

- Load the raw data from the modified dataset

- Remove outliers and erroneous data from the loaded dataset

- Convert the cleaned raw data into RAD and HJPD representations

- Feed the representations into tuned SVMs to generate two models

- Feed the test data to the two models and generate a confusion matrix to measure how each model performed
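
The cleaning step depends on what the “corrupted” data points look like, which is not described here. As one hypothetical sketch, the filter below drops frames with non-finite coordinates, implausibly large coordinates (an assumed 10 m bound), or every joint at the origin (assumed here to mean lost tracking). The names `is_valid_frame` and `clean_sequence` are illustrative.

```python
import math
from typing import List, Tuple

Joint = Tuple[float, float, float]


def is_valid_frame(joints: List[Joint], max_abs: float = 10.0) -> bool:
    """Hypothetical validity test for one skeleton frame.

    Rejects the frame if any coordinate is NaN/inf, if any coordinate's
    magnitude exceeds max_abs (assumed units: meters), or if every joint
    sits at the origin (assumed to indicate lost tracking).
    """
    coords = [c for joint in joints for c in joint]
    if any(not math.isfinite(c) or abs(c) > max_abs for c in coords):
        return False
    return any(c != 0.0 for c in coords)


def clean_sequence(frames: List[List[Joint]]) -> List[List[Joint]]:
    """Keep only the frames that pass is_valid_frame."""
    return [f for f in frames if is_valid_frame(f)]
```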

Results

After running the code and tuning the models to the best of my ability, here are the final confusion matrices for both the RAD and HJPD models:

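Representation: RAD

Accuracy: 62.5%

| Actual Activity Number / LIBSVM Classification | 8.0 | 10.0 | 12.0 | 13.0 | 15.0 | 16.0 |
| --- | --- | --- | --- | --- | --- | --- |
| 8.0 | 8 | 0 | 0 | 0 | 0 | 0 |
| 10.0 | 1 | 5 | 0 | 0 | 1 | 1 |
| 12.0 | 0 | 1 | 1 | 0 | 3 | 3 |
| 13.0 | 0 | 0 | 0 | 6 | 1 | 1 |
| 15.0 | 0 | 0 | 0 | 1 | 5 | 2 |
| 16.0 | 0 | 0 | 0 | 0 | 3 | 5 |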

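Representation: HJPD

Accuracy: 70.83%

| Actual Activity Number / LIBSVM Classification | 8.0 | 10.0 | 12.0 | 13.0 | 15.0 | 16.0 |
| --- | --- | --- | --- | --- | --- | --- |
| 8.0 | 7 | 1 | 0 | 0 | 0 | 0 |
| 10.0 | 1 | 5 | 0 | 0 | 0 | 2 |
| 12.0 | 0 | 0 | 7 | 0 | 1 | 0 |
| 13.0 | 2 | 0 | 1 | 5 | 0 | 0 |
| 15.0 | 0 | 0 | 0 | 0 | 7 | 1 |
| 16.0 | 0 | 2 | 0 | 0 | 3 | 3 |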
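
The reported accuracies follow directly from the confusion matrices: correct predictions lie on the diagonal, so accuracy is the diagonal sum divided by the total number of test samples (30/48 for RAD and 34/48 for HJPD, with 8 test samples per activity). The sketch below, with hypothetical function names, builds a confusion matrix from label lists and recomputes both figures from the values in the Results section.

```python
from typing import Dict, List, Sequence


def confusion_matrix(actual: Sequence[float], predicted: Sequence[float],
                     labels: Sequence[float]) -> List[List[int]]:
    """Rows are actual labels, columns are predicted labels."""
    index: Dict[float, int] = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for a, p in zip(actual, predicted):
        matrix[index[a]][index[p]] += 1
    return matrix


def accuracy(matrix: List[List[int]]) -> float:
    """Fraction of samples on the diagonal (correct predictions)."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


# Confusion matrices from the Results section (rows: actual activity).
RAD = [
    [8, 0, 0, 0, 0, 0],
    [1, 5, 0, 0, 1, 1],
    [0, 1, 1, 0, 3, 3],
    [0, 0, 0, 6, 1, 1],
    [0, 0, 0, 1, 5, 2],
    [0, 0, 0, 0, 3, 5],
]
HJPD = [
    [7, 1, 0, 0, 0, 0],
    [1, 5, 0, 0, 0, 2],
    [0, 0, 7, 0, 1, 0],
    [2, 0, 1, 5, 0, 0],
    [0, 0, 0, 0, 7, 1],
    [0, 2, 0, 0, 3, 3],
]

print(f"RAD: {accuracy(RAD):.2%}")    # 30 / 48 -> 62.50%
print(f"HJPD: {accuracy(HJPD):.2%}")  # 34 / 48 -> 70.83%
```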

Conclusion

Since both accuracies are over 50%, this project was considered a success. The HJPD representation also appears to be the more accurate of the two for this classification task (70.83% vs. 62.5%). The result is a pair of models that predict human actions from skeleton data. They are far from perfect, but they do much better than random guessing, which would be correct about 1 time in 6 (roughly 16.7%) across the six activities. This project later gave rise to the Moving Pose project.

Additional Notes:

- This project was tested on Python version 3.8.13

- For this project, both the complete MDA3 dataset and a modified MDA3 dataset are used. The modified MDA3 dataset contains only activities 8, 10, 12, 13, 15, and 16. The modified version also contains some “corrupted” data points, while the complete dataset does not.
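
One way to derive the six-activity subset from the complete dataset is to filter skeleton files by activity ID. The sketch below assumes the MSR Daily Activity 3D naming scheme `a<activity>_s<subject>_e<index>_skeleton.txt`; the pattern and function names are assumptions, and this is not necessarily how the modified dataset was produced.

```python
import re
from typing import Iterable, List, Optional

# Activity IDs kept in the modified dataset.
KEPT_ACTIVITIES = {8, 10, 12, 13, 15, 16}

# Assumed file-name pattern: a<activity>_s<subject>_e<index>_skeleton.txt
NAME_PATTERN = re.compile(r"a(\d+)_s(\d+)_e(\d+)_skeleton\.txt$")


def activity_id(filename: str) -> Optional[int]:
    """Extract the activity number from a file name, or None if it doesn't match."""
    match = NAME_PATTERN.search(filename)
    return int(match.group(1)) if match else None


def select_activities(filenames: Iterable[str]) -> List[str]:
    """Keep only files whose activity ID is in KEPT_ACTIVITIES."""
    return [f for f in filenames if activity_id(f) in KEPT_ACTIVITIES]
```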
